Safe RL with a Teacher

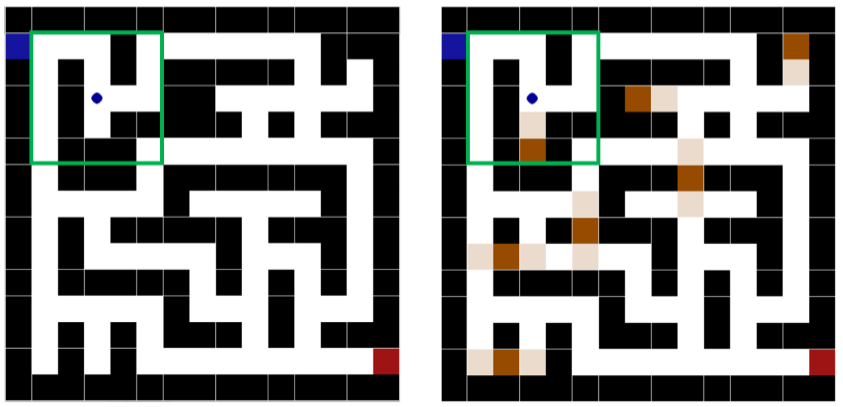

Although reinforcement learning (RL) has received substantial research attention in recent years, ensuring the safety of RL agents deployed in the real world remains an important open problem. Safe exploration is a particularly challenging aspect of this problem, since an RL agent that has never experienced a catastrophic state does not know to avoid it. To mitigate this problem, we propose the use of a teacher that can be queried about the safety of actions. To minimize queries to the teacher, we develop a predictive model that predicts which actions are unsafe. The teacher then need only be queried when the model predicts an action to be unsafe or when the model's uncertainty is high.
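
The query rule described above can be illustrated with a minimal sketch. It is not the method's actual implementation. It assumes that the predictive model is an ensemble whose members each output a probability that the action is unsafe, that uncertainty is measured as the spread of those probabilities, and that a fallback action is available when the teacher rejects an action. The function names, the thresholds, and the fallback behaviour are all hypothetical.

```python
from statistics import mean, pstdev
from typing import Callable, Hashable, Sequence, Tuple


def should_query_teacher(
    unsafe_probs: Sequence[float],
    unsafe_threshold: float = 0.5,       # hypothetical value
    uncertainty_threshold: float = 0.2,  # hypothetical value
) -> bool:
    """Decide whether the teacher must be consulted for one action.

    `unsafe_probs` holds one "probability unsafe" estimate per ensemble
    member (an assumption; the source does not specify the model).
    """
    predicted_unsafe = mean(unsafe_probs) >= unsafe_threshold
    # Disagreement between ensemble members serves as the uncertainty proxy.
    high_uncertainty = pstdev(unsafe_probs) >= uncertainty_threshold
    return predicted_unsafe or high_uncertainty


def filter_action(
    action: Hashable,
    unsafe_probs: Sequence[float],
    teacher_is_safe: Callable[[Hashable], bool],
    fallback_action: Hashable,
) -> Tuple[Hashable, bool]:
    """Return (action to execute, whether the teacher was queried)."""
    if not should_query_teacher(unsafe_probs):
        # Model is confident the action is safe: no query is spent.
        return action, False
    if teacher_is_safe(action):
        # Teacher overrides the model's pessimistic or uncertain prediction.
        return action, True
    # Assumed behaviour: substitute a known-safe fallback action.
    return fallback_action, True
```

Under these assumptions, a confidently safe prediction such as `[0.05, 0.1, 0.08]` passes through without a query, while a confident unsafe prediction or a strongly disagreeing ensemble such as `[0.0, 0.9, 0.1]` triggers one.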